Integrations

Overview of Integrations

Learn about the types of integrations that Narrator supports.

Integration Types

Any dataset can be exported for a one-time download or ongoing integration. The integration options are summarized below:

| Integration Type | Description | Update Cadence | Who can add one |
|---|---|---|---|
| Materialized View | Creates a materialized view in your warehouse | Ongoing, according to cron schedule configuration | Admin Only |
| View | Creates a view in your warehouse | Ongoing, according to cron schedule configuration | Admin Users |
| Google Sheet | Saves data to a specific sheet in a Google Sheet | Ongoing, according to cron schedule configuration | All User Types |
| Webhook | Sends your data to a custom URL | Ongoing, according to cron schedule configuration | Admin Only |
| Email CSV | Emails the dataset as a CSV (useful for datasets with more than 10,000 rows) | Ongoing, according to cron schedule configuration | All User Types |
| Klaviyo/Sendgrid (BETA) | Sends a list of users to your email service provider and updates any custom properties using dataset column values | Ongoing, according to cron schedule configuration | All User Types |

CSV Download

To download a one-time CSV export, click the dropdown menu on the dataset tab you want to export.

Materialized View

Go to Integrations and add a Materialized View.

Fields

| Field | Description |
|---|---|
| Pretty Name | The name of the table that will be created |
| Parent or Group | The parent dataset or group whose data you want to materialize |
| Cron Tab | How often the materialized view is reprocessed |
| Time Column (optional) | A timestamp column used for incremental updates |
| Days to Resync (optional) | The number of days back from today that are deleted and reinserted on every sync |

Narrator supports incremental materialization: on every run, Narrator deletes all rows whose Time Column falls within the last Days to Resync days, then reprocesses and reinserts all data from that date onward.
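The delete-and-reinsert cycle can be sketched with Python's built-in `sqlite3` module. This only illustrates the behavior described above and is not Narrator's implementation; the tables `source_events` and `mv_events`, the `ts` time column, and the `incremental_sync` helper are all hypothetical.

```python
import sqlite3
from datetime import datetime, timedelta


def incremental_sync(conn: sqlite3.Connection, days_to_resync: int, now: datetime) -> str:
    """Delete the trailing resync window from the materialized table, then reinsert it.

    Hypothetical tables: `source_events` stands in for the dataset's query result,
    `mv_events` for the materialized copy. Both store `ts` as ISO-8601 text, so
    string comparison orders timestamps correctly.
    """
    cutoff = (now - timedelta(days=days_to_resync)).isoformat(sep=" ")
    with conn:  # single transaction: commit both steps or roll back on error
        # 1. Delete every row whose time column falls inside the resync window.
        conn.execute("DELETE FROM mv_events WHERE ts >= ?", (cutoff,))
        # 2. Reprocess and reinsert all data from the cutoff onward.
        conn.execute(
            "INSERT INTO mv_events SELECT * FROM source_events WHERE ts >= ?",
            (cutoff,),
        )
    return cutoff


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_events (id INTEGER, ts TEXT)")
conn.execute("CREATE TABLE mv_events (id INTEGER, ts TEXT)")
# Row 1 is old and already materialized; rows 2 and 3 are recent in the source.
conn.execute("INSERT INTO mv_events VALUES (1, '2023-12-01 00:00:00'), (2, '2024-01-29 00:00:00')")
conn.execute(
    "INSERT INTO source_events VALUES "
    "(1, '2023-12-01 00:00:00'), (2, '2024-01-29 00:00:00'), (3, '2024-01-30 12:00:00')"
)
incremental_sync(conn, days_to_resync=3, now=datetime(2024, 1, 31))
print([row[0] for row in conn.execute("SELECT id FROM mv_events ORDER BY id")])  # → [1, 2, 3]
```

Row 1 sits outside the three-day window, so it is never touched; only rows on or after the cutoff are rewritten, which is where the compute savings come from.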

When to use incremental materialization?

Use incremental materialization when historical data rarely changes and you want to save compute.

Where is the materialized view in my warehouse?

Materialized views are created in your Materialize Schema (defaults to `dw_mvs`), which can be found on the company page. Where is the company page?

Useful for:

- Building dashboards in your BI tool

- Creating tables for analysis in your warehouse

How to: Materialize a Dataset

Watch this step-by-step tutorial to schedule a materialized view of a dataset in your warehouse.

View

Creates a view in your warehouse.

Fields

| Field | Description |
|---|---|
| Pretty Name | The name of the view that will be created |
| Parent or Group | The parent dataset or group to create the view from |

Where is the view in my warehouse?

Views are created in your Materialize Schema (defaults to `dw_mvs`), which can be found on the company page. Where is the company page?

Useful for:

- Adding data that is always up to date to a BI tool

- Creating datasets that are used in your warehouse for analysis

Google Sheet

Fields

| Field | Description |
|---|---|
| Pretty Name | The name of the sheet that will be created |
| Parent or Group | The data that will be synced to the Google Sheet |
| Cron Tab | How often the Google Sheet is synced |
| Sheet Key | The key of the Google Sheet, taken from its URL |

How do I find the sheet key?

- Go to the Google Sheet you want to set up the integration for
- Look at the URL of the sheet
- The sheet key is the part right after `/d/` and before the next `/` (see below)

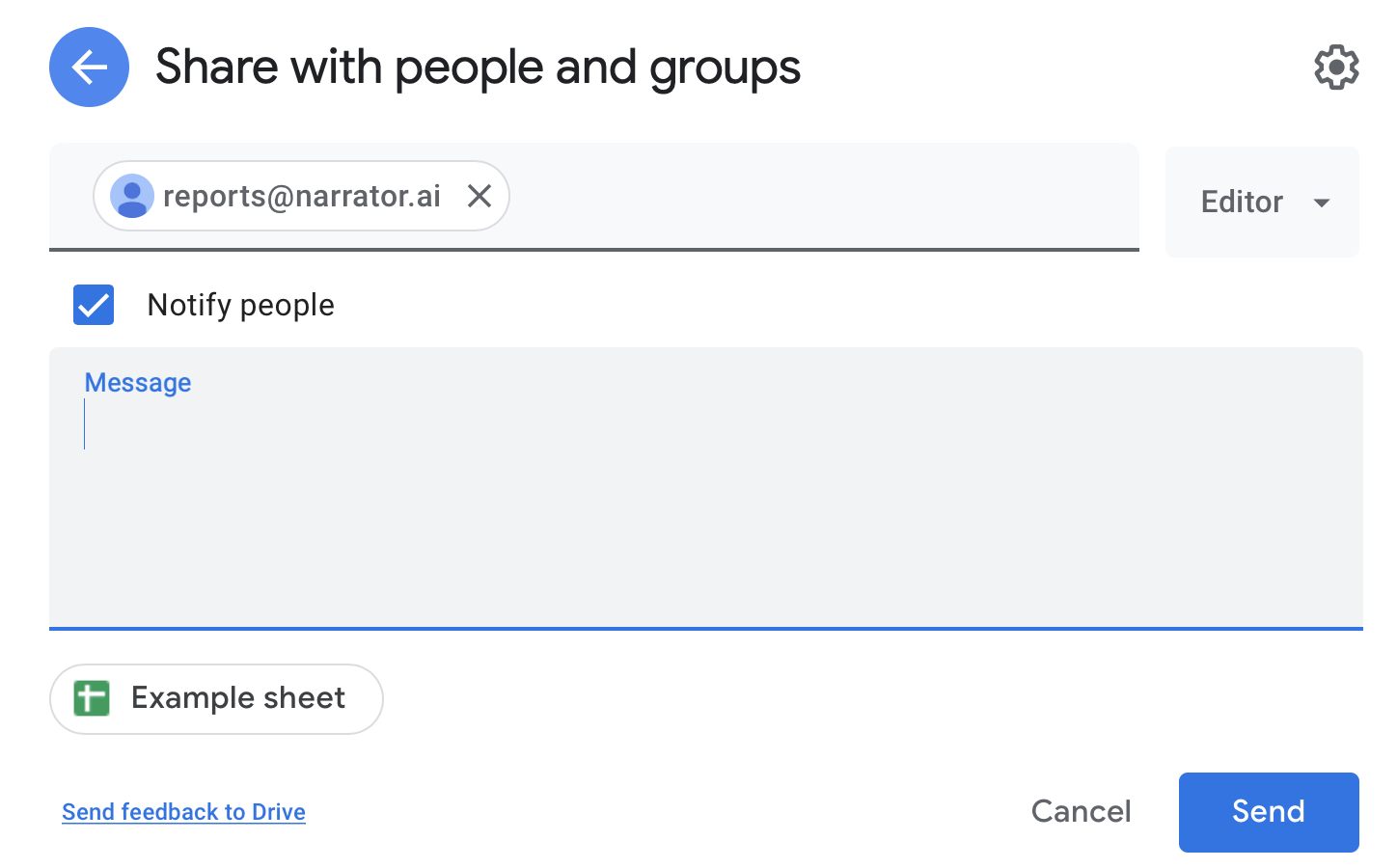
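If you are scripting the setup, the same rule (the segment after `/d/`, up to the next `/`) can be applied in code. A minimal sketch using only the standard library; `sheet_key_from_url` is a hypothetical helper and the key in the example URL is made up.

```python
import re
from urllib.parse import urlparse

# The sheet key is the path segment right after "/d/" and before the next "/".
_SHEET_KEY = re.compile(r"/d/([^/]+)")


def sheet_key_from_url(url: str) -> str:
    """Return the Google Sheet key embedded in a sheet URL."""
    match = _SHEET_KEY.search(urlparse(url).path)
    if match is None:
        raise ValueError(f"no /d/<key>/ segment in {url!r}")
    return match.group(1)


print(sheet_key_from_url(
    "https://docs.google.com/spreadsheets/d/1AbC-dEf_123/edit#gid=0"
))  # → 1AbC-dEf_123
```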

**You must grant `reports@narrator.ai` access to the sheet**

Don't forget to share the Google Sheet with reports@narrator.ai.

Useful for:

- Quick Excel-style models that are always up to date

- Building lightweight spreadsheet dashboards

- Sharing data with a team

How to: Sync Your Dataset to a Google Sheet

Watch this step-by-step tutorial to sync your data to a Google Sheet.

Webhook

Sends your data to a custom URL.

Fields

| Field | Description |
|---|---|
| Pretty Name | The name of the webhook |
| Parent or Group | The data you want sent to the webhook |
| Cron Tab | How often the data is sent |
| Rows to Post | The maximum number of rows posted per webhook request |
| Max Retries | The number of times Narrator retries a POST request that fails with status code 408, 429, or >500 |
| Webhook URL | The URL the data will be POSTed to |
| Auth | Narrator supports 3 types of auth: Basic Auth (username and password); Token, which adds an `Authorization: Bearer {TOKEN}` header; or Custom Headers, where you can add any key-value pair to your headers |
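On the receiving end, your endpoint should check whichever auth mode you configured. The sketch below uses only the Python standard library; the helper names, the token, and the `X-Api-Key` custom header are hypothetical, and Basic Auth is assumed to follow the standard HTTP scheme (base64 of `username:password`).

```python
import base64
import hmac


def basic_auth_header(username: str, password: str) -> dict:
    # Standard HTTP Basic auth: "Basic " + base64("username:password").
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return {"Authorization": f"Basic {token}"}


def bearer_header(token: str) -> dict:
    # Token mode as described above: "Authorization: Bearer {TOKEN}".
    return {"Authorization": f"Bearer {token}"}


def is_authorized(headers: dict, expected: dict) -> bool:
    """Receiver-side check: every expected header must be present and match.

    Header names are compared case-insensitively (as HTTP requires);
    values use hmac.compare_digest to avoid timing leaks.
    """
    lowered = {k.lower(): v for k, v in headers.items()}
    return all(
        hmac.compare_digest(lowered.get(k.lower(), ""), v)
        for k, v in expected.items()
    )


expected = bearer_header("my-secret-token")
print(is_authorized({"authorization": "Bearer my-secret-token"}, expected))  # → True
print(is_authorized({"Authorization": "Bearer wrong"}, expected))  # → False
```

For Custom Headers, pass the same key-value pairs you entered in Narrator as `expected`, e.g. `{"X-Api-Key": "..."}`.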

Auth is encrypted and saved independently

Auth headers are encrypted and saved independently, so the UI cannot retrieve them. You can always update them.

We do this to ensure the highest level of security by isolating critical information.

Each request will follow the structure below:

```json
{
  "event_name": "dataset_materialized",
  "metadata": {
    "post_uuid": "[UUID]",
    "dataset_slug": "[SLUG]",
    "group_slug": "[SLUG]"
  },
  "records": [
    ...
  ]
}
```

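```python
from typing import List, Optional

from pydantic import BaseModel


class WebhookMeta(BaseModel):
    post_uuid: str  # shared by every post in the same sync
    dataset_slug: str
    group_slug: Optional[str] = None  # optional, so payloads without a group still validate


class WebhookInput(BaseModel):
    event_name: str  # e.g. "dataset_materialized"
    metadata: WebhookMeta
    records: List[dict]  # one dict per row, keyed by snake-cased column name
```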

post_uuid: A unique identifier for the set of posts.

- If you sync 10,000 rows with a Rows to Post of 500, the webhook fires 20 times back to back, and all 20 posts use the same post_uuid.

dataset_slug: A unique identifier of the dataset.

group_slug: A unique identifier of the group in the dataset.

records: An array of dictionaries where each key is the snake-cased column name and each value is that column's value.
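To make the batching concrete, the sketch below simulates how one sync splits into posts that share a single `post_uuid`, and how a receiver might reassemble them. It mirrors the payload shape documented above but is not Narrator's code; `build_posts`, `reassemble`, the `customer_id` column, and the `my_dataset` slug are hypothetical, and the receiver assumes it can buffer a whole sync in memory.

```python
import uuid
from collections import defaultdict


def build_posts(records, rows_to_post, dataset_slug, group_slug=None):
    """Split one sync into payloads of at most `rows_to_post` records.

    Every payload produced by the same call shares one post_uuid.
    """
    post_uuid = str(uuid.uuid4())
    return [
        {
            "event_name": "dataset_materialized",
            "metadata": {
                "post_uuid": post_uuid,
                "dataset_slug": dataset_slug,
                "group_slug": group_slug,
            },
            "records": records[start:start + rows_to_post],
        }
        for start in range(0, len(records), rows_to_post)
    ]


def reassemble(posts):
    """Receiver side: collect incoming records per post_uuid."""
    by_sync = defaultdict(list)
    for post in posts:
        by_sync[post["metadata"]["post_uuid"]].extend(post["records"])
    return dict(by_sync)


rows = [{"customer_id": i} for i in range(10_000)]
posts = build_posts(rows, rows_to_post=500, dataset_slug="my_dataset")
synced = reassemble(posts)
print(len(posts), len(synced), len(next(iter(synced.values()))))  # → 20 1 10000
```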

All webhooks are sent from one of Narrator's static IPs (see Narrator's Static IPs).
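If your endpoint should only accept traffic from Narrator, you can allowlist those static IPs. A minimal sketch with Python's `ipaddress` module; the addresses below are placeholders from the documentation-only range `203.0.113.0/24`, not Narrator's real IPs, and `from_narrator` is a hypothetical helper.

```python
import ipaddress

# Placeholder allowlist: replace with the addresses listed on "Narrator's Static IPs".
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.10/32"),
    ipaddress.ip_network("203.0.113.11/32"),
]


def from_narrator(remote_addr: str) -> bool:
    """Return True if the request's source IP is on the allowlist."""
    addr = ipaddress.ip_address(remote_addr)
    # Membership across IP versions (IPv6 address vs IPv4 network) is simply False.
    return any(addr in net for net in ALLOWED_NETWORKS)


print(from_narrator("203.0.113.10"))  # → True
print(from_narrator("198.51.100.7"))  # → False
```

If your endpoint sits behind a load balancer or proxy, check the original client IP that the proxy forwards rather than the proxy's own address.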

Tips:

- Add query params to the URL to pass fields in dynamically

- Close the loop by removing processed data from the dataset (e.g., if you use a dataset to send an email, add the received email to the dataset and remove people who have already received it, so your webhook does not send multiple emails to the same customer)

- Subscribe to the task in Manage Processing so you can be alerted if any issues arise
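For the query-param tip, the receiver reads the extra fields back out of the request URL. A minimal sketch with `urllib.parse`; the `https://example.com/hooks/narrator` endpoint and the `campaign`/`source` parameters are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical Webhook URL configured in Narrator, with extra fields in the query string.
WEBHOOK_URL = "https://example.com/hooks/narrator?campaign=winback&source=narrator"


def query_fields(url: str) -> dict:
    """Return query parameters as a flat dict (first value wins for repeated keys)."""
    return {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}


print(query_fields(WEBHOOK_URL))  # → {'campaign': 'winback', 'source': 'narrator'}
```

In a `http.server.BaseHTTPRequestHandler`, pass `self.path`, which includes the query string.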

Useful for:

- Sending email lists to Mailchimp for email campaigns

- Removing or mutating lists in Salesforce

- Sending aggregations to Zapier, which can forward that data anywhere

- Quick data science models that process new data

Email CSV

Emails the dataset as a CSV (useful for datasets with more than 10,000 rows).

Fields

| Field | Description |
|---|---|
| Pretty Name | The name of the file that will be attached to the email |
| Parent or Group | The data that will be sent as a CSV |
| Cron Tab | How often you want to receive this attachment |
| User Emails | List of users receiving the email |

Klaviyo/Sendgrid (BETA)

Sends a list of users to your email service provider, and updates any custom properties using dataset column values.

Fields

| Field | Description |
|---|---|
| Pretty Name | The name of the integration (useful when you look at the processing view in Narrator) |
| Parent or Group | The data that will be sent to your email service provider |
| Cron Tab | How often you want to sync this list (*see API Limitation below) |
| List Url | The URL of the contact list in the email service provider |
| Api Key | An API key from the email service provider |

How do I find the List Url (Klaviyo)?

- Go to Lists & Segments

- Grab the URL from the individual list page

- It should look something like: "https://www.klaviyo.com/list/.../list-slug-name"

How do I find the Api Key (Klaviyo)?

- Go to your Account page

- Go to Settings/API Keys

- Create a Private API Key for Narrator

API Limitation for larger datasets

Klaviyo's API allows only 100 emails to be updated at once. If your list has more than 10,000 emails, updates will take a while, so be careful about how often you schedule the sync.
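To estimate how long a sync will take, count the API calls: at 100 emails per call, a 10,000-email list needs 100 calls. A sketch of the batching arithmetic; `batches` is a hypothetical helper, and the 100-per-call figure is the limit quoted above, so confirm it against Klaviyo's current API documentation.

```python
from typing import Iterator, List

KLAVIYO_BATCH_SIZE = 100  # per-request limit quoted above


def batches(emails: List[str], size: int = KLAVIYO_BATCH_SIZE) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most `size` emails."""
    for start in range(0, len(emails), size):
        yield emails[start:start + size]


emails = [f"user{i}@example.com" for i in range(10_050)]
calls = list(batches(emails))
print(len(calls), len(calls[-1]))  # → 101 50
```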

See tips below for how to get around this limitation

Tips:

- Use timestamp filters in the dataset to reduce the number of users that get synced

- If you only care about recent activity, don't add users to the list if their last activity was more than a month ago

- You can add an activity in Narrator for Added to List that represents when a user was updated in a list, and then use that activity to remove people based on the last time they were updated

- All columns from the dataset are sent to the email service provider as custom properties. If you don't want them added to your user profiles, hide those columns in the dataset before triggering the integration.
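In Narrator these tips are dataset settings rather than code, but the logic they amount to can be sketched as a filter over rows. This is an illustration only: the column names `email`, `last_activity_at`, `plan`, and `internal_score`, the helper `rows_to_sync`, and the 30-day stand-in for "a month" are all assumptions.

```python
from datetime import datetime, timedelta

# Hypothetical dataset rows; column names are illustrative only.
rows = [
    {"email": "a@example.com", "last_activity_at": datetime(2024, 3, 20), "plan": "pro", "internal_score": 0.9},
    {"email": "b@example.com", "last_activity_at": datetime(2023, 11, 2), "plan": "free", "internal_score": 0.1},
]


def rows_to_sync(rows, now, hidden=frozenset(), max_age=timedelta(days=30)):
    """Keep recently active users and drop hidden columns.

    Every remaining column would become a custom property on the user profile,
    so anything in `hidden` is stripped before sending.
    """
    cutoff = now - max_age
    return [
        {col: val for col, val in row.items() if col not in hidden}
        for row in rows
        if row["last_activity_at"] >= cutoff
    ]


synced = rows_to_sync(rows, now=datetime(2024, 4, 1), hidden={"internal_score"})
print([r["email"] for r in synced])  # → ['a@example.com']
print(sorted(synced[0]))  # → ['email', 'last_activity_at', 'plan']
```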

Still have questions?

Our data team is here to help! Here are a couple ways to get in touch...

💬 Chat us from within Narrator

💌 Email us at support@narrator.ai

🗓 Or schedule a 15 minute meeting with our data team
